
Dongyang Li (李东洋)

Hi! 👋😋 I am currently a third-year M.S. student in NCClab@SUSTech, majoring in Electronic Engineering at Southern University of Science and Technology, Shenzhen, China. My supervisor is Prof. Quanying Liu. I previously worked closely with Prof. Chao Zhang, and I am now also working closely with Dr. Shaoli Huang.

My main research interest is using Multimodal Large Language Models (MLLMs) to build Brain-Computer Interfaces (BCIs), and through them to advance Embodied AI and NeuroAI. My mission is to architect the next generation of General AI by synthesizing the principles of brain intelligence with the power of MLLMs. I aim to decode the neural foundations of perception and action and translate them into robust physical and linguistic intelligence, thereby enabling machines that truly understand and collaborate with humanity.

🎯 I am actively applying for Fall 2026 PhD programs. Feel free to contact me by email if you are interested in discussing or collaborating with me.


News

- [12/2025] One journal paper was accepted by Scientific Data. [Paper] [Code]

Selected Publications


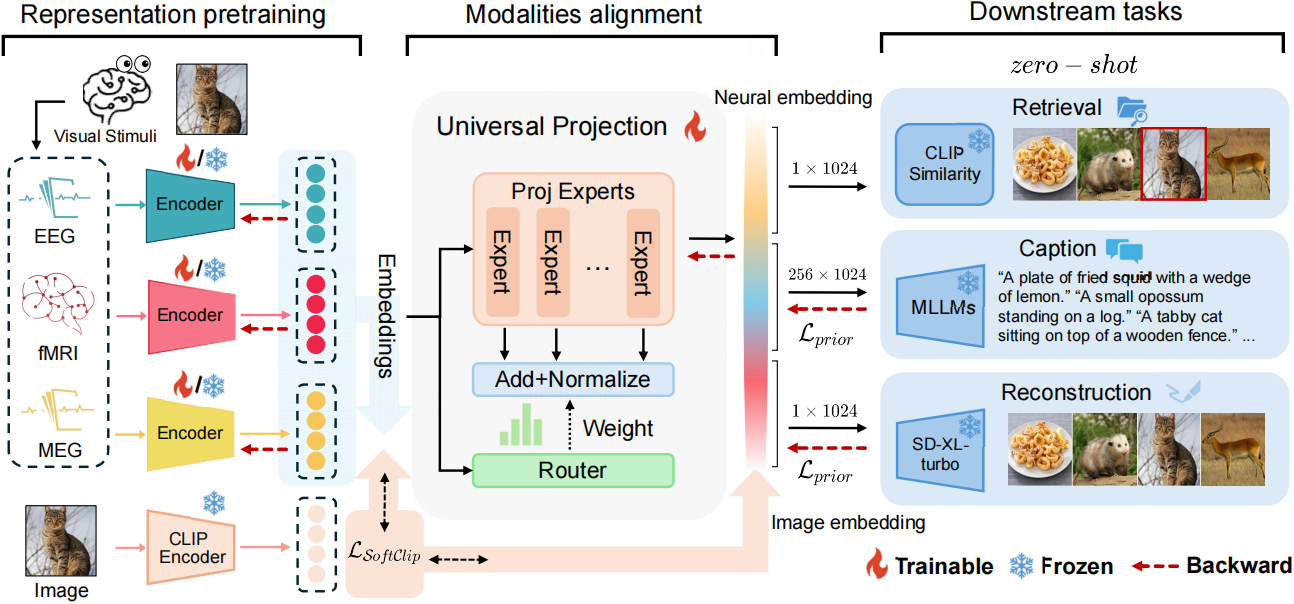

BrainFLORA: Uncovering Brain Concept Representation via Multimodal Neural Embeddings

Dongyang Li*, Haoyang Qin*, Mingyang Wu, Chen Wei, Quanying Liu

ACM MM, 2025, Oral

Project page / arXiv / Github

We present BrainFLORA, the first framework that aligns EEG, MEG and fMRI into a shared neural embedding with a multimodal universal projector, setting a new bar for cross-subject visual multi-task decoding while uncovering the brain's hidden map between concepts and real-world objects.


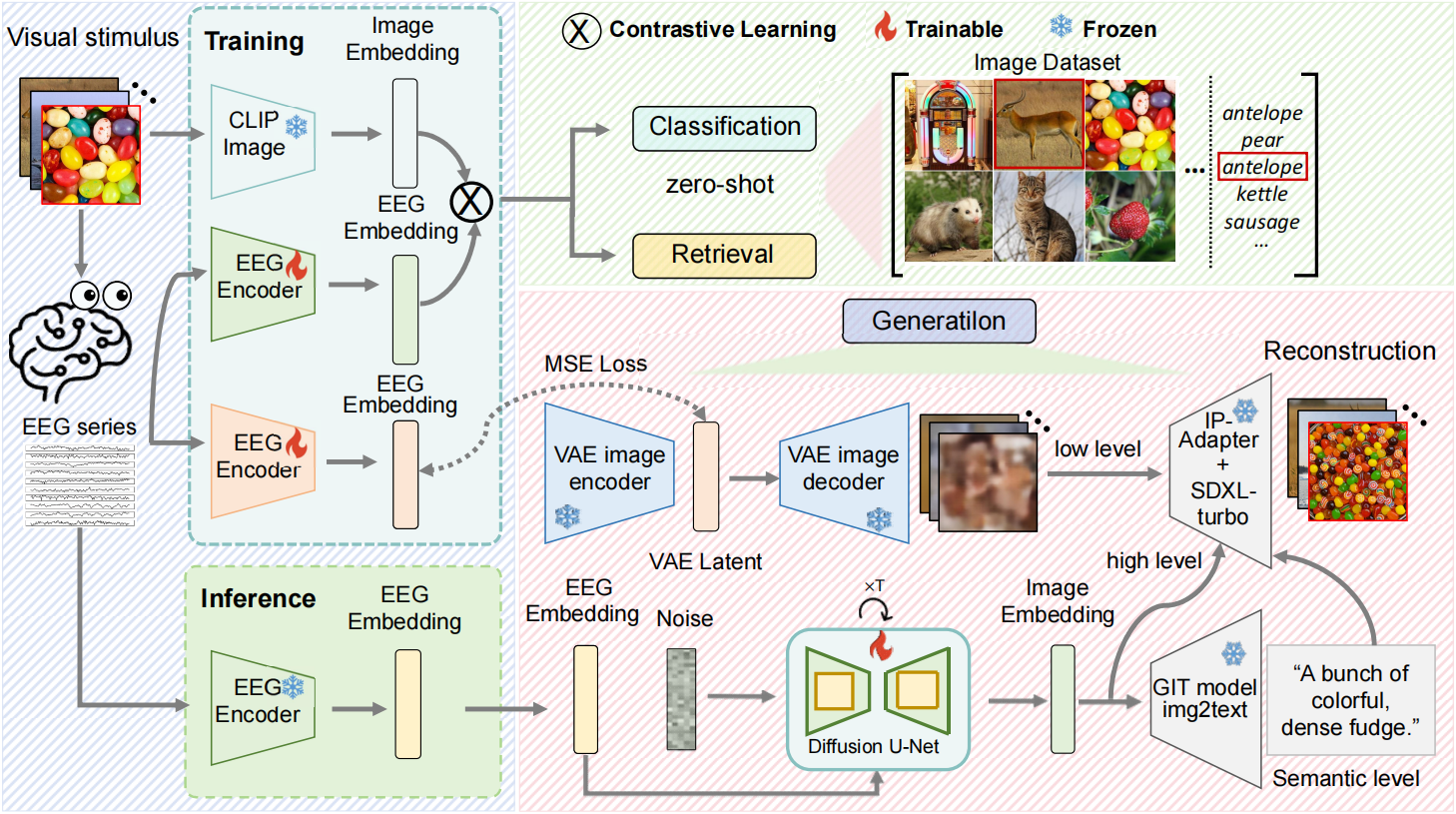

Visual Decoding and Reconstruction via EEG Embeddings with Guided Diffusion

Dongyang Li*, Chen Wei*, Shiying Li, Jiachen Zou, Quanying Liu

NeurIPS, 2024, Poster

Project page / arXiv / Github

We introduce the first zero-shot EEG-to-image reconstruction pipeline. With our brain encoder and two-stage guided diffusion, we can directly reconstruct what you are seeing from cheap, millisecond-level brainwaves, setting new state-of-the-art benchmarks in classification, retrieval and generation and making everyday EEG-based visual decoding finally practical.

Education


Southern University of Science and Technology Sep 2023 - Jun 2026 (expected)

M.Sc. in Electronic Science and Technology


Zhengzhou University Sep 2019 - Jun 2023

B.Eng. in Computer Science and Technology

Experience


AGIBot Oct 2025 - Present

Research Intern


Shanghai AI Laboratory May 2025 - Oct 2025

Research Intern


Academic Services

Conference Reviewer for ICLR, ICML, NeurIPS, ACL, KDD, AAAI.


Webpage template is borrowed from this